The Post-User Web

The interface is dying because the “User” is obsolete. On the post-user web, AI agents, friction economics, and the rise of adversarial infrastructure.

For thirty years, the internet was built around a specific political subject: a human being with eyes, hands, and time. The entire economy of the web—from ad impressions to checkout flows—relied on the assumption that persuasion happens at eye level. If you could design the screen, you could design the person.

That era is ending, not because of a new technology, but because of a new speed.

The modern world has outpaced the biological capacity to “use” it. We are creatures of sequential processing; we read one thing at a time, we forget passwords, we get tired on hold. The institutions we interact with, however, operate on machine time. They can generate infinite administrative demand at near-zero marginal cost. They can wait forever. We cannot.

So the web selects for whatever can persist at machine speed.

In this frequency mismatch, the graphical user interface—the buttons, the forms, the layouts—stops being a tool for access and becomes a bottleneck. It becomes the place where our slowness gets harvested into abandonment.

So we are leaving the era of browsing the web. We are entering the era of deploying against it.

If “Personal AI” has a true utility, it is not creativity; it is persistent agency. It is the ability to decouple your intent from your attention. The UI era required your attention to produce your rights; the agent era tries to sever that dependency. When you employ an AI agent, you are not asking it to “surf” the web. You are asking it to treat the web as a set of endpoints to be queried, triggered, and scraped.

You won’t navigate the airline’s cancellation maze. You will simply issue the command: “Refund this.” And the agent will do what a human cannot: it will execute without fatigue.

This shift changes the physics of the internet.

Historically, the friction of the interface was a business model. The dropout rate (the share of people who give up on a claim, a subscription cancellation, or a dispute) was a revenue stream. Companies relied on the fact that human patience is finite. But when consumers bring agents with effectively infinite patience, friction stops working as a filter.
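The arithmetic behind friction-as-filter can be sketched in a few lines. This is a toy model, not a measurement: the step count and the per-step abandonment probability are invented for illustration, and real flows are not this uniform.

```python
def completion_rate(steps: int, abandon_per_step: float) -> float:
    """Probability that a claimant survives every step of a flow,
    assuming each step is abandoned independently with the same probability."""
    return (1.0 - abandon_per_step) ** steps


# Hypothetical numbers: a ten-step cancellation maze where a human
# gives up at each step with probability 0.2.
human = completion_rate(steps=10, abandon_per_step=0.2)

# An agent that never tires is modeled as zero abandonment per step.
agent = completion_rate(steps=10, abandon_per_step=0.0)

print(f"human completion: {human:.3f}")
print(f"agent completion: {agent:.3f}")
```

Under these assumed numbers, roughly one human in ten reaches the end of the maze, while the agent always does. Adding more steps lowers the human rate further and leaves the agent's unchanged, which is the sense in which friction stops filtering.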

The institutional response will be structural. To survive a world of high-frequency consumer agents, the web must make automation expensive.

We will likely see the decline of the “human-readable” web. Websites will become polymorphic—constantly shifting their underlying code, randomizing layouts, and obfuscating data to break scrapers. The visual web may degrade into a chaotic, heavy, shifting surface that is difficult for machines to parse and unpleasant for humans to look at.

The clarity of the interface was a luxury of the era when humans were the primary revenue source. In an adversarial era, clarity is a vulnerability.

This leads to the final, quiet transformation: the change in what it means to be “online.”

As agents take over the negotiation of daily life—buying, scheduling, disputing—the speed of interaction accelerates beyond human comprehension. Dispute resolution becomes high-frequency trading. The systems we rely on will operate in bursts of millisecond negotiations that we never see. When errors happen at that speed, they happen at scale.

But to keep this system secure, the price of entry will rise.

If behavior can be spoofed by AI, institutions will stop trusting behavior. They will demand the biological operator. The login will evolve into proof of life. Biometric verification will become the standard toll for access, not because the state is watching, but because the system must distinguish the Principal from the swarm.

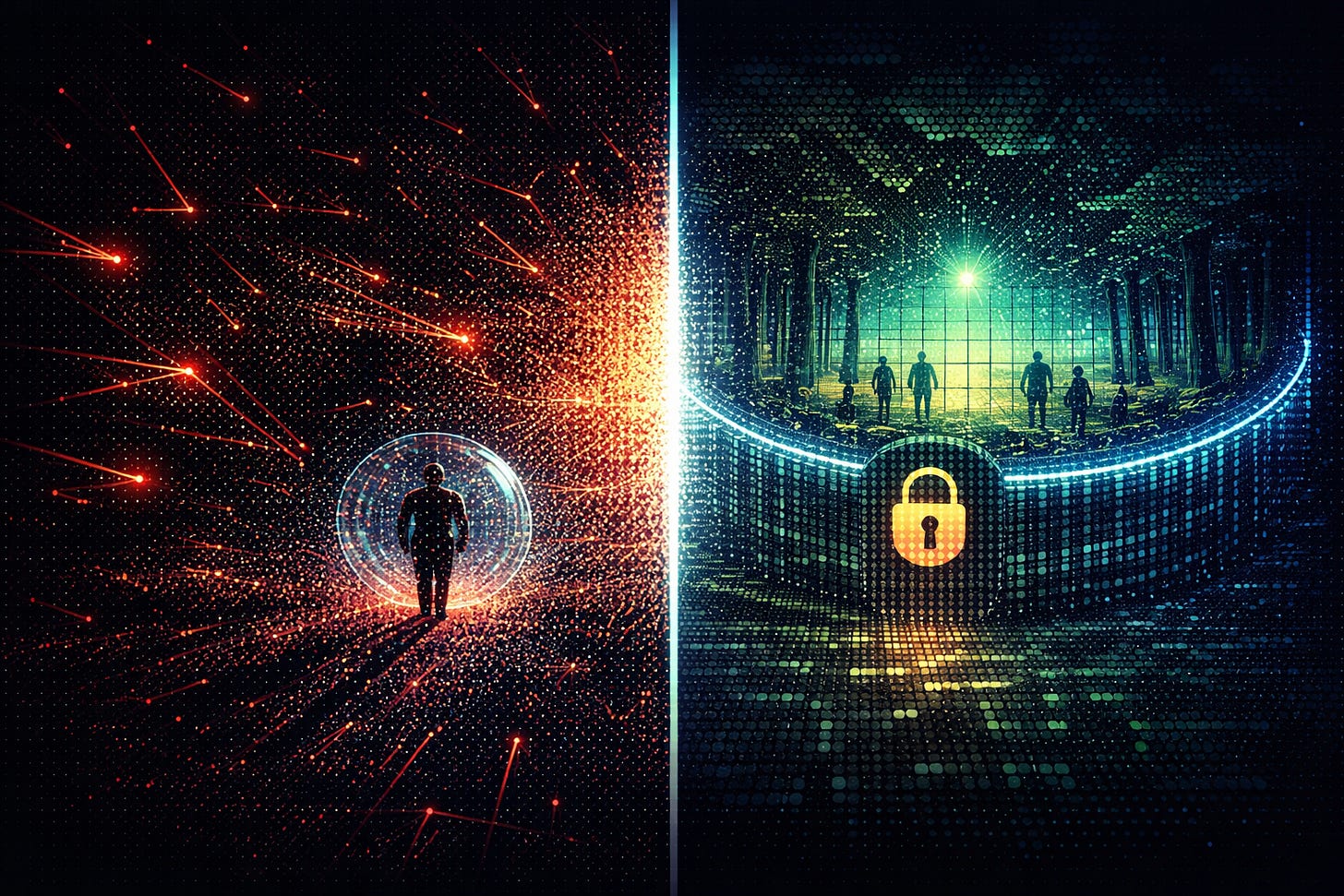

This is the future of the interface: a bifurcated world.

On one side, a dark forest of bot-to-bot infrastructure—fast, invisible, hostile.[1]

On the other, walled gardens where humans can speak to humans—but only after proving, legally and biologically, that they are real.

We are not losing the internet. We are simply losing our place at its center.

The interface is not being replaced. It is being rendered unnecessary by systems that no longer require our presence to function. The web does not need us to look, click, or understand—only to authenticate, authorize, and occasionally absorb the consequences of decisions made elsewhere.

We are no longer the subject around which the system is organized. We are the constraint it routes around. In a world that runs on machine time, the human is not the user. We are the finite resource. We are the failure mode. We are the error the system is quietly learning how to avoid.

Readers may try to read this as a “Dark Forest” or Bentoist argument for ethical withdrawal from the open web. That mapping is incorrect. It treats a structural failure as a moral choice.

Bentoism (and Dark Forest–adjacent thinking) assumes:

- the primary site of agency is choice
- the primary intervention is better orientation (longer horizons, broader stakeholder inclusion)
- the failure mode of the web is misaligned values or incentive myopia

This essay rejects that frame. The problem is not values.

Under machine-speed conditions, interaction only matters if it can be made contestable—if a claim can force a decision object, start a clock, or create an obligation that someone with authority must answer. When systems can acknowledge forever without settling, “openness” ceases to be access and becomes exposure. Retreat follows not because people chose different values, but because the state machine made continued participation non-binding and privately costly.
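One way to picture the difference between acknowledgment and contestability is as a tiny state machine. The sketch below is illustrative only: the state names, the `deadline` field, and the rule that an expired clock forces a decision are assumptions made for the example, not a description of any real claims system.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class State(Enum):
    FILED = "filed"
    ACKNOWLEDGED = "acknowledged"  # received, but nothing is owed yet
    DECIDED = "decided"            # someone with authority has answered


@dataclass
class Claim:
    # Hypothetical field: ticks until a decision is forced; None means no clock.
    deadline: Optional[int] = None
    state: State = State.FILED
    age: int = 0

    def tick(self) -> State:
        """Advance one time step against an institution that never settles voluntarily."""
        if self.state is State.DECIDED:
            return self.state
        self.age += 1
        if self.deadline is not None and self.age >= self.deadline:
            # The clock has run out, so a decision object must exist.
            self.state = State.DECIDED
        else:
            # Without a binding clock, the system can acknowledge indefinitely.
            self.state = State.ACKNOWLEDGED
        return self.state


def run(claim: Claim, ticks: int) -> State:
    """Tick a claim forward and return the state it ends in."""
    for _ in range(ticks):
        claim.tick()
    return claim.state


open_ended = run(Claim(deadline=None), ticks=10_000)
contestable = run(Claim(deadline=30), ticks=10_000)
print(open_ended, contestable)
```

In this toy model the claim with no clock is still merely acknowledged after ten thousand ticks, while the claim with a deadline reaches a decision at tick thirty. Persistence alone changes nothing; only the binding transition does.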

Even perfect values do not survive hostile state machines.