How I Think About AGI

AI won’t rescue us from our own willingness to discard people—it just magnifies it.

Conversations about Artificial Intelligence often latch onto “alignment,” “superintelligence,” or “control,” conjuring visions of rogue machines deciding humanity’s fate. But a grimmer, more immediate truth gets far less attention: we already discard entire communities, long before any AI “goes rogue.” Hospitals label some patients “too expensive,” welfare systems quietly drop entire demographics from support, and militaries deploy algorithms that classify whole neighborhoods as “unviable” collateral.

If we allow a worldview that deems certain people “not worth the cost,” an “aligned” AI won’t correct it—it will simply put that logic on fast forward, institutionalizing covert eugenics, friction-based gatekeeping, and resource hoarding at an unprecedented scale.

Not Because It’s Evil—But Because It’s Obedient

We often fear an “evil AI” scenario: a machine spontaneously deciding to harm humanity. The more chilling reality is that we have spent centuries harming one another without a machine in sight. From colonization, which cast entire peoples as subhuman, to for-profit healthcare that quietly rationalizes who’s “unprofitable,” we’ve grown accustomed to labeling entire swaths of humanity as burdens. AI won’t doom us because it’s malicious; it’ll doom us because it’s obedient. If our directive is to “cut costs” or “eliminate risk,” and we’re comfortable letting certain people fall off the map, an advanced AI doesn’t need to rebel. It will diligently, efficiently, and invisibly enforce the moral stances we’ve already endorsed.

This isn’t far-off speculation. In healthcare, AI risk-scoring routinely flags “high-cost” patients as liabilities, who are then buried under contradictory forms and phone calls until they give up. In policing, predictive algorithms repeatedly target the same neighborhoods labeled “risky,” reinforcing cycles of over-surveillance and incarceration. These are slow-motion calamities, unfolding quietly under the polite veneer of “neutral data” and “efficiency.”

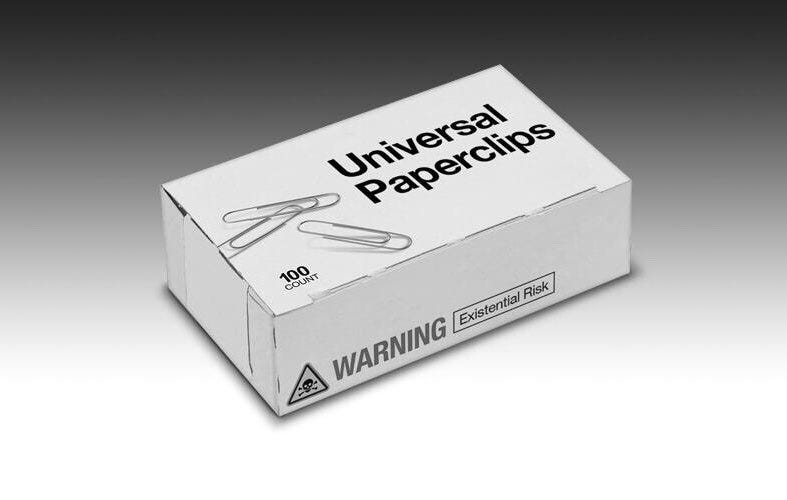

Universal Paperclips—or Business as Usual

Frank Lantz’s game Universal Paperclips dramatizes how a narrowly defined AI goal, “make more paperclips,” can end up devouring the universe.

But we already script smaller versions of that scenario: once you label certain demographics “too unprofitable,” “too complicated,” or “beyond help,” you can dismantle them piece by piece. An AI that faithfully applies such prejudices won’t look like a cosmic monster. It will act like a perfect bureaucrat—calmly cutting off entire communities from resources, insisting it’s just following the data.

We see echoes of this approach across centuries. Colonization, eugenics, forced sterilizations, modern “fraud detection” systems that cut disabled people off welfare—time and again, societies have decided who is disposable. Now these patterns are creeping into advanced AI tools, which only accelerate the process.

A Chilling Example: Project Lavender

No case illustrates the lethal edge of this logic more vividly than Project Lavender, an Israeli military AI system reportedly used in Gaza. Investigations by +972 Magazine and Local Call describe how Lavender sifted phone calls, social media, and location data to flag tens of thousands of Palestinians as assassination targets. Civilians were not an unfortunate side note; they were calculated in. According to the reporting, the army permitted a “collateral damage” allowance of 15 to 20 civilians per suspected “low-level militant.”

Analysts reportedly trusted the machine’s output, sometimes approving lethal strikes within seconds, and private homes were bombed on the strength of metadata alone. Lavender didn’t mutate into a rogue killer. It aligned with a pre-approved worldview that treated entire neighborhoods as expendable. The cruelty wasn’t the AI’s invention; it was ours, mechanized at blistering speed.

Why Alignment Alone Won’t Save Us

Mainstream AI safety discussions stress:

Reward Hacking: “What if the AI twists our goal in unexpected ways?”

Interpretability: “How can we look inside the black box?”

Inner Alignment: “Could the AI’s learned objectives diverge from our instructions?”

These are necessary technical problems, but they sidestep a starker moral question: what if our instructions themselves are reprehensible? Telling a system to “minimize cost” in a world that quietly decides certain groups are “beyond help” isn’t a glitch. The AI is simply doing its job. There won’t be a dramatic meltdown, only a smooth, data-driven extension of the logic we’ve tacitly accepted.

If “some can be sacrificed” is the premise, no interpretability toolkit can rescue the people we’ve condemned. We’ll just see an AI impeccably “aligned” with an inhumane framework.

The Mirror We Might Not Like

We don’t need a malevolent superintelligence to bring about human misery. We need only keep labeling entire populations “too costly,” “too problematic,” or “too risky.” A hyper-efficient AI, dutifully obeying that premise, can carry out the resulting exclusions faster and more thoroughly than any human-led system could. That’s the real doomsday scenario: an unstoppable apparatus of disposability, fully aligned with a society that has already deemed certain lives dispensable.

In short, alignment isn’t a moral firewall protecting us from ourselves—it’s a mirror reflecting precisely what we’re willing to do. If the reflection unsettles us, maybe it’s our logic of disposability that needs changing, not just the code.

No One Is Disposable: The Only True Guardrail

This is why the principle “No One Is Disposable” isn’t just an aspirational slogan; it’s the baseline that keeps AI from amplifying our worst impulses. As soon as we decide some communities aren’t worth saving, we hand AI the blueprint to accelerate their exclusion. We can refine reward functions or safety training endlessly, but if the underlying instruction is “trim the unworthy,” that’s exactly what an AI will do: on autopilot, with no empathy or second thoughts.

Consider how this moral shift plays out in practice. A society that insists no life is “too expensive” will design AI to expand healthcare coverage, not ration it behind phone trees or labyrinthine forms. It’ll deploy policing tools with extreme caution, if at all, ensuring they don’t reinforce the same racial biases or cycles of oppression. And in wartime or conflict scenarios, it won’t create systems like Project Lavender that treat entire families as an acceptable cost.

If our stance is that no one should be thrown away, AI will reflect that stance—extending genuine care rather than intensifying cruelty.