Consensus Without Conflict Is a Lie

In journalism, science, and law, we know that a single, smooth answer is dangerous. So why do we call it “best practice” when it comes from an algorithm?

In high-stakes domains, we agree that closure is corrosive. In journalism, one voice is propaganda, so we demand multiple sources and visible corrections. In science, premature consensus kills discovery, because progress is found in the outliers. In courts, rigid formulas produce injustice, so due process requires context, cross-examination, and the right to appeal.

In these fields, we know that a single, authoritative answer erases dissent, hides weak signals, and lets responsibility vanish through a trapdoor labeled “procedure.”

But in the new arenas of automated decision-making, this principle is inverted. The same dangerous move—collapsing complexity into a single answer—is now marketed as wisdom.

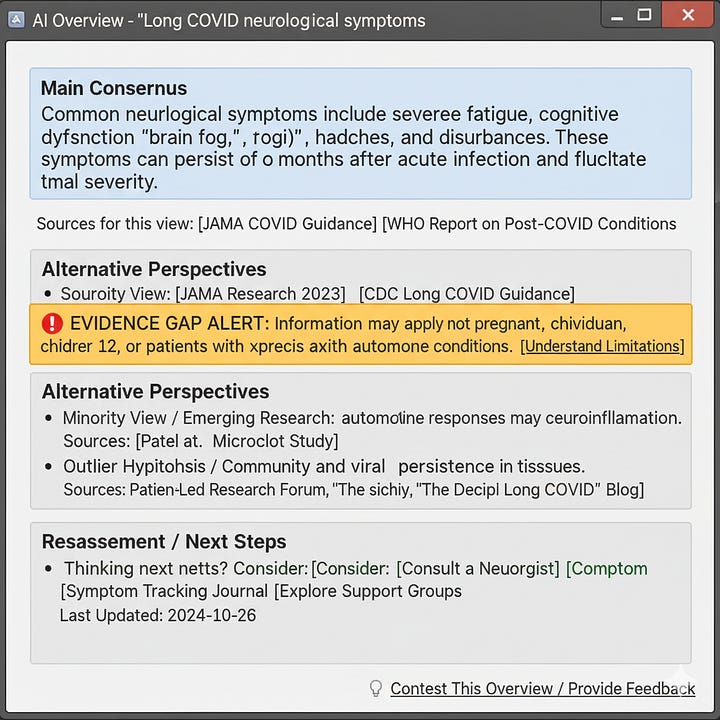

In Search: AI overviews flatten the web into one neat paragraph, burying minority sources.

In Healthcare: Guideline engines produce one bedside recommendation, ignoring that the patient in front of you—if they are pregnant, disabled, or multimorbid—may never have been in the evidence base.

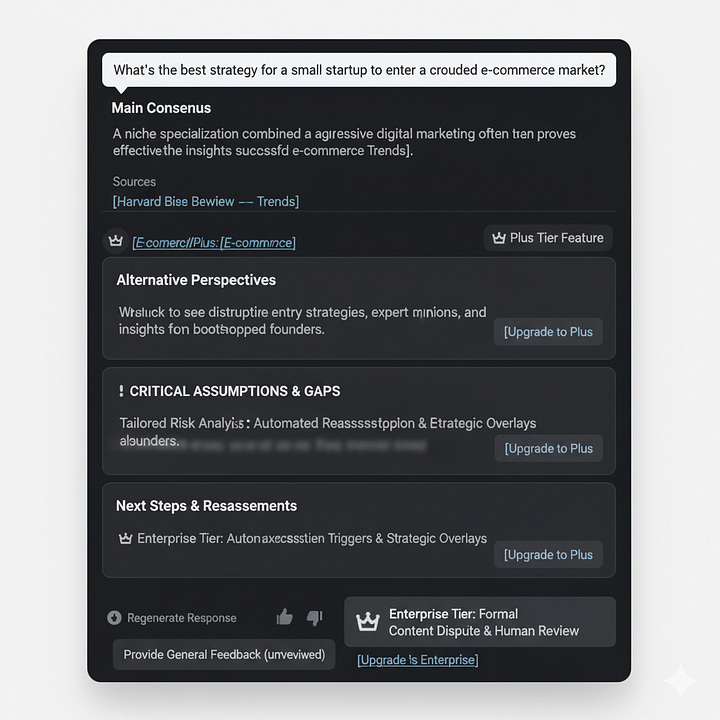

In Hiring: Automated filters reward familiar pedigrees and speech patterns, coding non-standard excellence as “risk.”

In Finance: Analyst consensus defines a narrow band of prudence. Forecasts outside it look reckless until they prove right, at which point consensus simply rewrites itself.

If singular smoothness is unacceptable for a reporter or a judge, why do we accept it from a machine? The harms don’t change. The stakes only rise.

How Plausibility Becomes Inevitability

What begins as one option among many hardens into “best practice” through two reinforcing forces:

Plausibility: Familiar, tidy answers are treated as mature and reasonable. Once something feels right, we stop asking who it excludes.

Smoothness: Institutions prize what scales without friction. Dissent, exceptions, and appeals create friction, so they are engineered away.

Plausibility breeds smoothness. Smoothness hardens into what I like to call isness—the resigned shrug that “this is just how things are.”

This process is not neutral; it is engineered erasure. Every time a single “best” is enforced, the same closures follow: dissent disappears, weak signals vanish, accountability shifts to the system, and justice is denied to anyone who doesn’t fit the median.

The “Anti-Human Premium”

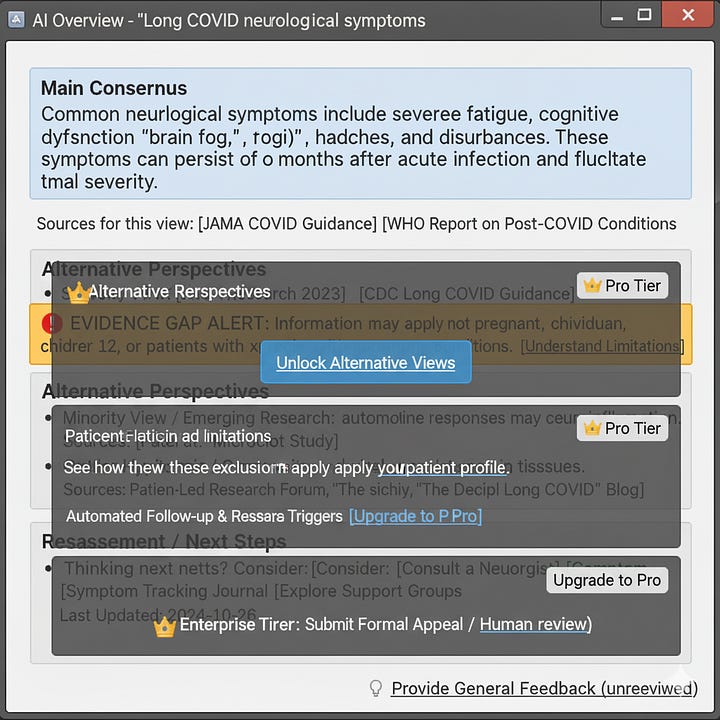

The next step in this enclosure is to monetize the solution, treating dissent, provenance, and refusal as paid features.

Basic Tier: One clean answer. No citations. No appeal.

Pro Tier: Limited sources and footnotes.

Enterprise Tier: Dissent logs, provenance graphs, and the right to a human review.

This isn’t a business model; it’s extortion by subscription.

Imagine if libraries charged extra to see the index, or courts sold the right to file an appeal.

We are normalizing a world where the powerful pay for a map while everyone else is given a pin and told to trust it.

What product culture calls innovation is often just deletion, repackaged as convenience.

“Seamless” ends up meaning the seams have been censored.

“Frictionless” ends up meaning you have no rights.

“Instant” ends up meaning you have no time to contest.

The Alternative Is Rough (in a Good Way)

The opposite of a smooth, singular “best” is not “worst.” It’s rough: standards built with visible seams to preserve dissent, admit exclusions, and keep refusal survivable.

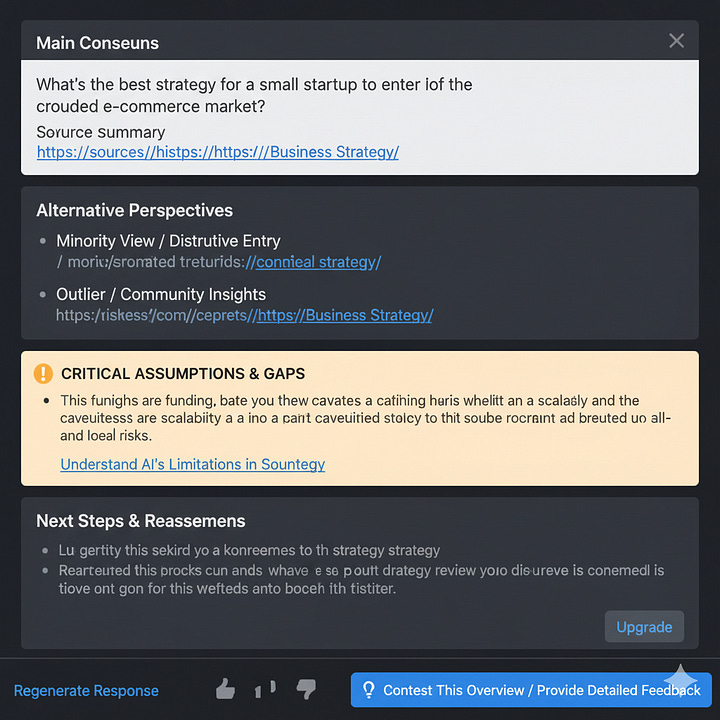

Reasons, or it didn’t happen: No verdict arrives without its sources and logic.

Dissent is in the frame: Majority, minority, and outlier views are displayed side-by-side.

Exclusions are exposed: The system declares who the evidence left out, tied to the case at hand.

Refusal is protected: A one-click “doesn’t apply here” routes to a human with the authority to reverse the decision.

The pause is valued: Time for a second opinion is built into the process before a decision locks in.
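To make these five seams concrete, here is a minimal Python sketch of a decision record that carries them. Everything in it is an assumption for illustration: the `Decision` class, its field names, and the `missing_seams` check are hypothetical and not drawn from any existing system.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Decision:
    """Hypothetical record for one automated decision (all names are illustrative)."""
    verdict: str
    sources: List[str] = field(default_factory=list)      # reasons behind the verdict
    views: Dict[str, str] = field(default_factory=dict)   # "majority", "minority", "outlier"
    exclusions: List[str] = field(default_factory=list)   # who the evidence left out
    human_reviewer: Optional[str] = None                  # person with authority to reverse
    pause_hours: float = 0.0                              # time before the decision locks in


def missing_seams(d: Decision) -> List[str]:
    """Return the principles this decision does not yet satisfy."""
    missing = []
    if not d.sources:
        missing.append("reasons")
    if not {"majority", "minority", "outlier"} <= set(d.views):
        missing.append("dissent")
    # Assumption: an empty list counts as "not declared" in this sketch.
    if not d.exclusions:
        missing.append("exclusions")
    if d.human_reviewer is None:
        missing.append("refusal")
    if d.pause_hours <= 0:
        missing.append("pause")
    return missing


smooth = Decision(verdict="deny")
print(missing_seams(smooth))
# prints ['reasons', 'dissent', 'exclusions', 'refusal', 'pause']
```

A bare verdict fails every check, which is the point: in this sketch the single clean answer is the least complete record, not the most polished one.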

We already track smoothness with metrics like uptime and latency.

I suggest we also measure openness with an Open-Possibility Index that holds systems accountable for dissent rates, weak-signal velocity, tail-group safety, and appeal times. A system that fails to keep possibilities open is not in compliance.
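One way such an index might be computed is sketched below. The essay names the four components but not how to score or combine them, so the rest is assumption: the `OpennessReport` fields, the 0-to-1 normalization (higher means more open), the 0.5 threshold, and the choice to take the weakest component rather than an average.

```python
from dataclasses import dataclass


@dataclass
class OpennessReport:
    """Hypothetical inputs, each assumed pre-normalized to 0..1 (higher = more open)."""
    dissent_rate: float          # share of outputs that show non-majority views
    weak_signal_velocity: float  # how quickly outlier evidence gets surfaced
    tail_group_safety: float     # outcomes for people far from the median
    appeal_time: float           # speed of appeals (1.0 = fastest)


def open_possibility_index(r: OpennessReport) -> float:
    """Score a system by its weakest component, so one strength cannot hide a failure."""
    scores = [r.dissent_rate, r.weak_signal_velocity, r.tail_group_safety, r.appeal_time]
    for s in scores:
        if not 0.0 <= s <= 1.0:
            raise ValueError("scores must be normalized to the range 0..1")
    return min(scores)


def in_compliance(r: OpennessReport, threshold: float = 0.5) -> bool:
    """Assumed rule: compliant only if the index meets the threshold."""
    return open_possibility_index(r) >= threshold


report = OpennessReport(
    dissent_rate=0.9,
    weak_signal_velocity=0.8,
    tail_group_safety=0.2,
    appeal_time=0.7,
)
print(open_possibility_index(report))  # prints 0.2
print(in_compliance(report))           # prints False
```

Taking the minimum is a deliberate design choice here. An average would let strong dissent rates smooth over poor tail-group safety, which is exactly the kind of collapse into the median this essay argues against.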

“Best Answers” Are Anti-Democratic

We reject single answers in journalism, science, and courts because consensus without conflict is a lie. It erases dissent, weakens accountability, and protects incumbents. The same must hold for the algorithms that govern our lives.

What looks inevitable is often engineered.

What sounds plausible is often rehearsed.

What is, was made, and can be remade.

Keep dissent visible. Keep refusal possible. Keep time for appeal. And when a machine calls its answer “best,” make it prove it.
