Assume Maximum Awareness

There is a comforting fiction that sustains most institutional reform. It is the belief that the machine is cruel because it is blind.

We tell ourselves that if the executives only saw the burnout rates in the ICU, if the board only understood the bias in the training data, if the policymakers only heard the stories from the front lines, they would act. We treat dysfunction as a knowledge deficit. We assume that the gap between “what is happening” and “what should happen” is a gap in information.

Tragic Institutionalism begins by rejecting this comfort. It asserts a colder, more difficult premise: The system is not blind. It is optimized.

In the digitized, data-saturated landscape of the modern organization, we must assume Maximum Awareness. The institution does not suffer from a lack of visibility into its own harms; it suffers from a calculated decision to tolerate them.

The Industrialization of Sight

The “Ignorance Hypothesis,” the idea that leadership is unaware of ground truths, might have been true fifty years ago. Today, it is almost never credible.

Modern institutions are panoptic. They possess industrialized telemetry that captures the granular reality of their operations in near real time.

In Healthcare: Hospital administrators do not need a nurse to tell them staffing is dangerous. They have dashboards showing nurse-to-patient ratios, call-light response times, medication error rates, and readmission statistics, all trended against payroll costs.

In Tech: Platform executives do not need a whistleblower to tell them their product harms teens. They have A/B tests, engagement heatmaps, churn models, and sentiment analysis that quantify exactly how much psychological distress correlates with a 5% lift in ad revenue.

In Logistics: Warehouse managers know exactly how many workers are skipping bathroom breaks. The productivity tracking software flags “time off task” down to the second.

The data exists. It is collected, cleaned, stored, and visualized. The problem is not that this data doesn’t reach the top. The problem is what happens when it gets there.

From Knowledge to Optimization

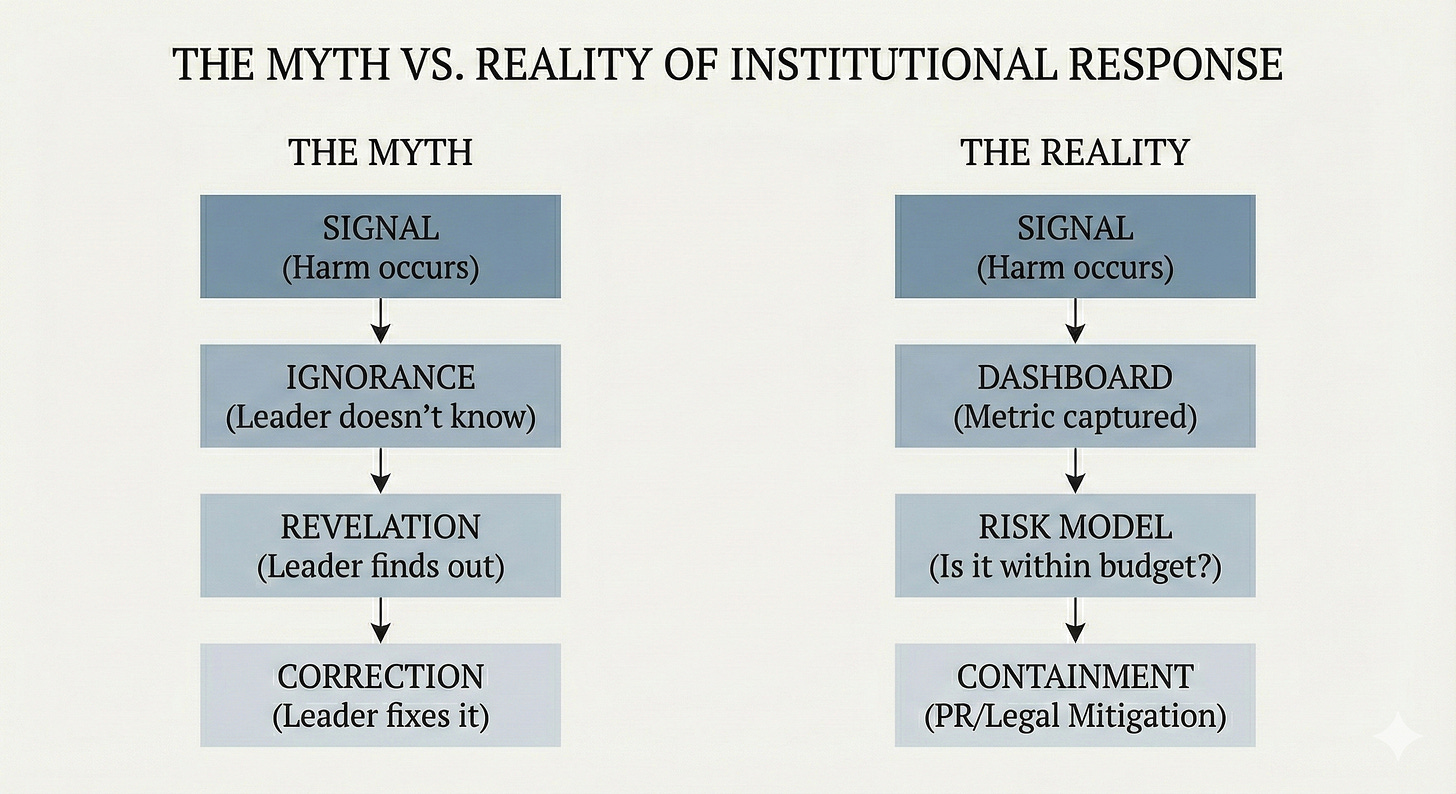

When we assume the problem is ignorance, we assume that Knowledge + Power = Action.

But in a tragic institution, the formula is different: Knowledge + Power = Pricing.

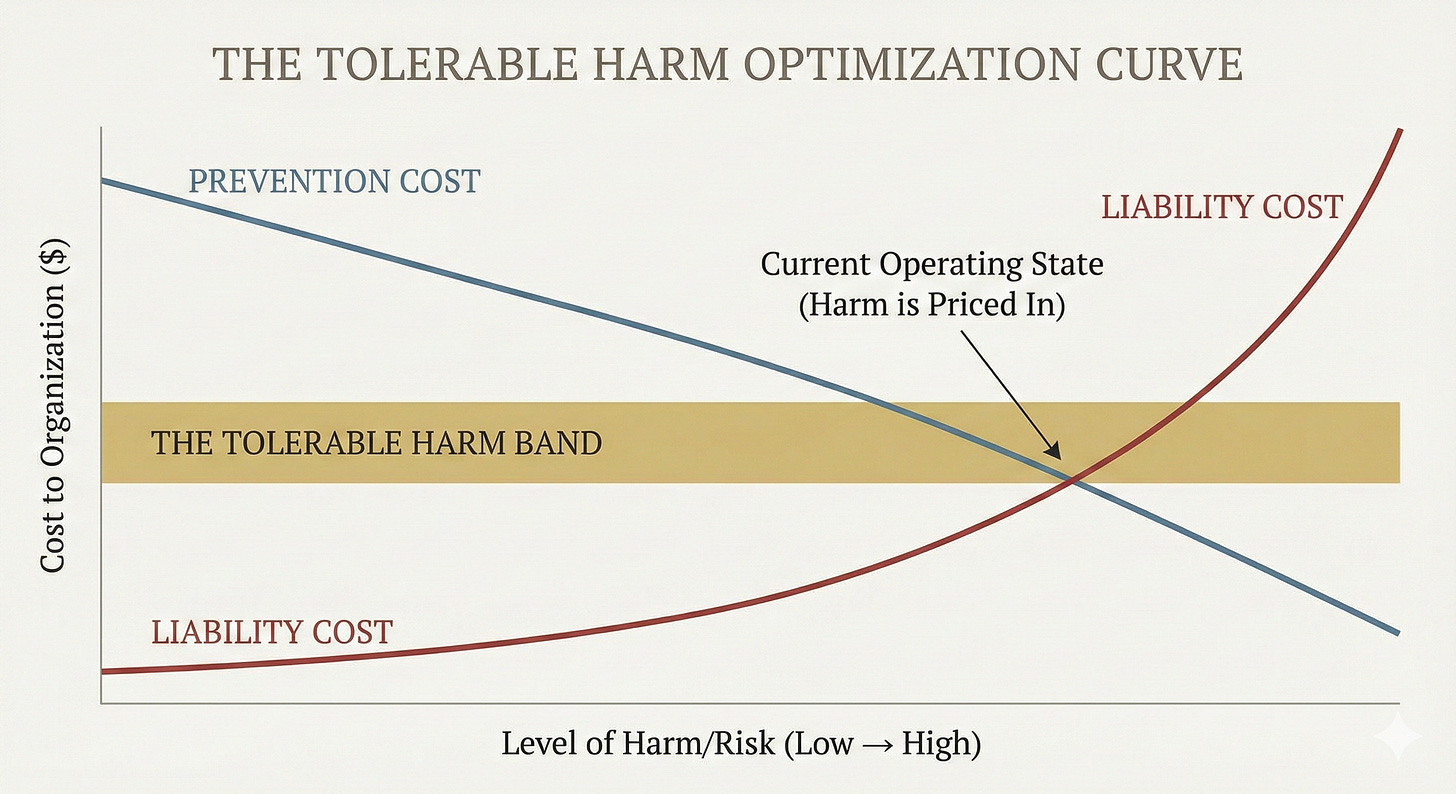

When leadership reviews data on harm, they rarely view it as a moral crisis to be solved. They view it as a variable to be managed. They are not looking for “Zero Harm”; they are looking for the “Tolerable Harm Band.”

They ask the rational questions of their role:

Is this illegal? (Or just “regulatory grey zone”?)

Will this cause a PR crisis? (Or just “low-level complaints”?)

Does the cost of fixing it exceed the cost of settling the lawsuits?

Once the harm falls within the “tolerable” band—where the cost of settlements, attrition, and bad press is lower than the cost of structural change—the harm is “priced in.” It becomes a known operating expense.

The burnout rate isn’t a surprise; it’s a budget line item for recruitment. The safety violations aren’t accidents; they are the calculated yield of an efficiency model.

This is the “banality of optimization.” It does not require villains twirling mustaches. It requires only fiduciaries doing their legal job: maximizing value within the constraints of risk.
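For readers who think in code, the pricing logic described above can be written as a small sketch. Everything in it is an assumption made for illustration: the `HarmLedger` class, the `is_priced_in` function, and the numbers are hypothetical and not drawn from any real institution. It shows only the comparison the argument rests on: a harm stays “priced in” for as long as tolerating it costs less than fixing it.

```python
from dataclasses import dataclass


@dataclass
class HarmLedger:
    """Hypothetical annual costs of leaving a known harm in place."""
    settlements: float
    attrition: float
    bad_press: float

    def cost_of_status_quo(self) -> float:
        # Total cost the institution pays for tolerating the harm.
        return self.settlements + self.attrition + self.bad_press


def is_priced_in(ledger: HarmLedger, cost_of_structural_change: float) -> bool:
    """Return True while tolerating the harm is cheaper than fixing it."""
    return ledger.cost_of_status_quo() < cost_of_structural_change


# Illustrative numbers only (say, millions per year).
fix_cost = 10.0
ledger = HarmLedger(settlements=2.0, attrition=1.5, bad_press=0.5)
print(is_priced_in(ledger, fix_cost))  # True: 4.0 < 10.0

# The same harm after its material costs have been pushed up.
ledger_after = HarmLedger(settlements=2.0, attrition=6.0, bad_press=3.0)
print(is_priced_in(ledger_after, fix_cost))  # False: 11.0 > 10.0
```

In this toy model the harm itself never changes between the two calls. Only its cost to the institution does, and that alone flips the result.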

The Ritual of Surprise

If institutions are so aware, why do they seem so shocked when scandal breaks?

Because “surprise” is a legal and reputational necessity.

When a crisis goes public—a data leak, a mass walkout, a patient death—leadership performs the Ritual of Surprise. They issue statements about being “deeply concerned.” They launch “independent investigations” to “get to the bottom of this.”

This performance serves two purposes:

Liability Shielding: Admitting “we knew this would happen” creates legal exposure for knowing or reckless conduct. Claiming “we were unaware” preserves the defense of mere incompetence, which is usually legally safer than complicity.

Preserving the Ignorance Myth: It invites the public and the workforce back into the comfortable fantasy. It suggests, “Now that we know, surely we will fix it.”

It resets the clock. It buys another cycle of “listening tours” and “task forces” that result in performative tweaks rather than structural redesign.

The Strategic Implication: Stop Teaching, Start Negotiating

Why does this matter for the worker?

Because if you believe the Ignorance Hypothesis, you will spend your energy on Education. You will write longer memos, gather more heartbreaking stories, and try to “raise awareness.” You will exhaust yourself trying to teach a lesson that has already been learned.

If you accept the Maximum Awareness hypothesis, you realize that Education is a trap.

You stop trying to “inform” leadership of the costs. You accept that they have already calculated the costs and decided you are going to pay them.

This changes your strategy from Pedagogy to Leverage.

You don’t write a memo about “moral distress”; you file a union grievance about “unsafe working conditions.”

You don’t explain why a product is unethical; you refuse to sign the compliance certificate that allows it to launch.

You don’t appeal to their better angels; you raise the material cost of the status quo until it is no longer “priced in.”

There is no innocence in the modern institution. There is only design.

To assume maximum awareness is painful. It requires admitting that the people in charge are not confused, but comfortable with the damage they are doing.

But it is also liberating. It frees you from the exhausting, futile work of trying to be the “conscience” of a machine that has none. It allows you to see the terrain clearly:

You are not a teacher in a classroom of confused pupils.

You are a negotiator in a room where the other side has already run the numbers against you.